AWS finally released a Redshift cluster update last week which implemented their new Federated Querying capability. This excites the heck out of me because it means that I don’t need to replicate Postgres to Redshift with DMS anymore. It is working very well so far to query my Aurora Serverless RDS databases.

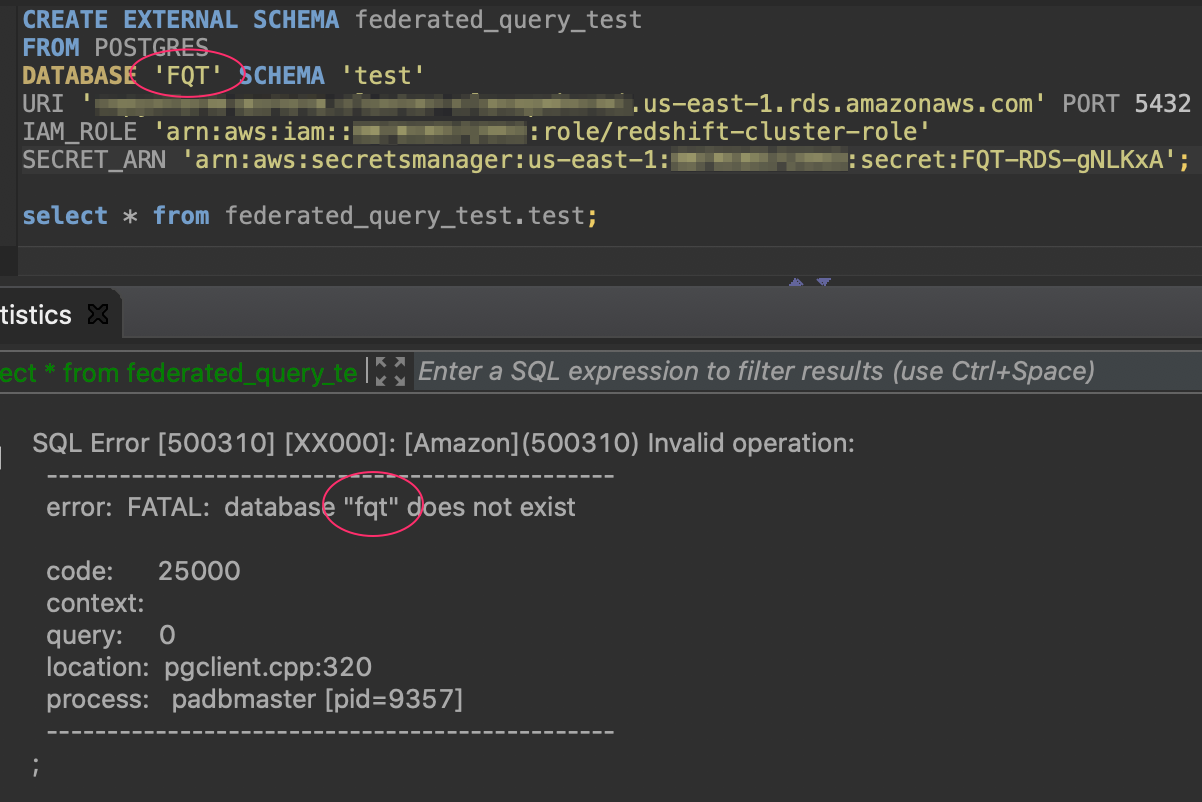

Per AWS documentation our create statement looks something like this:

CREATE EXTERNAL SCHEMA IF NOT EXISTS local_schema_name

FROM POSTGRES

DATABASE 'postgres_database_name' SCHEMA 'postgres_schema_name'

URI 'postgres_endpoint' PORT 5432

IAM_ROLE 'iam-role-arn-string'

SECRET_ARN 'ssm-secret-arn'Lets break that down:

local_schema_name : This is the name that you want to give to the external schema in your Redshift database. This can be any name you want.

postgres_database_name : This is the name of your Postgres database. This is where I hit my first roadblock… It turns out that Redshift lowers your entry here. If your Postgres database contains capital letters, your connection will fail.

postgres_schema_name : The name of your schema in Postgres on which you want to perform federated queries. Just like above, your entry gets lowered. If your schema name contains capital letters, your CREATE statement will complete, but your queries against the external schema will fail.

URI : The hostname or address of your Postgres database.

PORT: The Postgres port

IAM_ROLE : This is the IAM Role that Redshift will use. The only permissions it needs are “secretsmanager:GetSecretValue” on the resource ARN of the secret containing your Postgres database credentials. This role must be attached to your cluster, or you can use chained roles if the role attached to your cluster has permission to assume it.

SECRET_ARN : The ARN of a Secrets Manager secret containing your database credentials. The Secret’s required keys are ‘username’ and ‘password’.

If you tried this and you can now query your external schema, then congratulations. If it didn’t, then you’re in the same boat I was. Let’s do some troubleshooting:

Troubleshooting

When you attempt to run a query, you get an error like this: "SQL Error: Invalid Operation: Failed to incorporate external table into local catalog. Error= Unable to connect to endpoint with address"![]()

Well thanks to a particularly helpful fellow over at AWS support, I learned that this IP address (and many others) are the Secrets Manager service. So we know that our cluster is having difficulty connecting to Secrets Manager to retrieve the secret.

You have two options here.

1. If your cluster has access to the internet, you can open TCP 443 outbound to 0.0.0.0/0 so that the cluster can contact the Secrets Manager service.

2. If your cluster doesn’t have internet access, or if you just don’t feel like doing that, you will need to create a VPC endpoint for the Secrets Manager service in your VPC. You will need to open TCP 443 outbound from your Redshift cluster to the security group associated with your VPC endpoint. If you are unfamiliar with creating a VPC endpoint, AWS has a pretty good blog post about it.

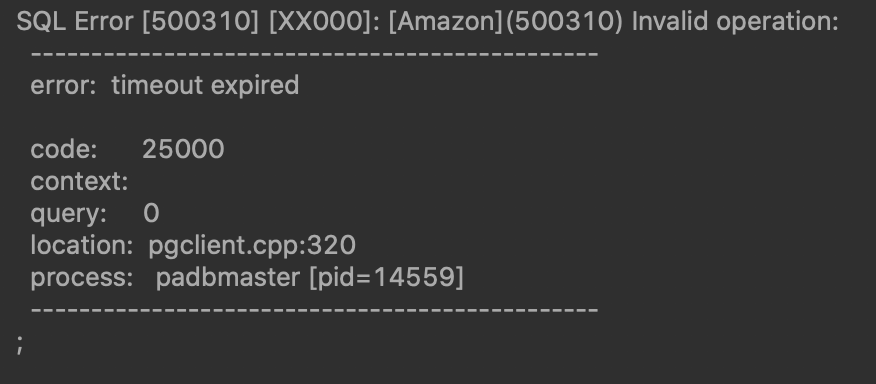

When you attempt to run a query, you get an error like this:

“SQL Error: Invalid Operation: error: timeout expired code: 2500"

This means that your cluster has successfully retrieved your Secrets Manager secret, but is now unable to connect to your Postgres database. Verify that your Redshift security group allows TCP outbound to your Postgres database server and port.

If your Postgres database is not publicly accessible, you also need to ensure VPC connectivity to it. This requires Enhanced VPC Routing to be enabled on your Redshift cluster.

Thanks for reading.